
Artificial Intelligence-Enabled Intelligent Assistant for Personalized and Adaptive Learning in Higher Education

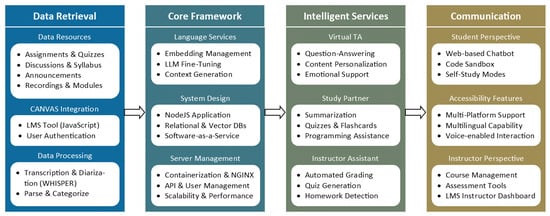

www.mdpi.com

This paper presents a novel framework, artificial intelligence-enabled intelligent assistant (AIIA), for personalized and adaptive learning in higher education. The AIIA system leverages advanced AI and natural language processing (NLP) techniques to create an interactive and engaging learning platform. This platform is engineered to reduce cognitive load on learners by providing easy access to information, facilitating knowledge assessment, and delivering personalized learning support tailored to individual needs and learning styles. The AIIA’s capabilities include understanding and responding to student inquiries, generating quizzes and flashcards, and offering personalized learning pathways. The research findings have the potential to significantly impact the design, implementation, and evaluation of AI-enabled virtual teaching assistants (VTAs) in higher education, informing the development of innovative educational tools that can enhance student learning outcomes, engagement, and satisfaction. The paper presents the methodology, system architecture, intelligent services, and integration with learning management systems (LMSs) while discussing the challenges, limitations, and future directions for the development of AI-enabled intelligent assistants in education.

I’m confident we’ll start seeing stuff like this soon. The upcoming ChatGPT model (I forgot the version name) prompts itself recursively: it breaks a big task down into smaller ones, then runs and reruns those subtasks before coming up with an output.
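
A rough sketch of what that kind of recursive decomposition might look like, assuming a model that can be asked to split a task into subtasks and to answer small ones directly. This is not how OpenAI actually does it; `split`, `answer`, and `combine` are hypothetical stand-ins, replaced here by toy functions that sum a range of integers so the code runs without any model.

```python
from typing import Callable, List, Tuple


def solve(task: str,
          split: Callable[[str], List[str]],
          answer: Callable[[str], str],
          combine: Callable[[str, List[str]], str],
          depth: int = 0,
          max_depth: int = 3) -> str:
    """Recursively break a task down, solve the pieces, then merge the results."""
    # Stop splitting once the depth limit is reached, so recursion always ends.
    subtasks = split(task) if depth < max_depth else []
    if not subtasks:
        return answer(task)  # small enough (or too deep): answer directly
    partials = [solve(t, split, answer, combine, depth + 1, max_depth)
                for t in subtasks]
    return combine(task, partials)


# --- Hypothetical toy stand-ins for model calls ---

def _bounds(task: str) -> Tuple[int, int]:
    # Parse "sum a..b" into the integers (a, b).
    a, b = task.split()[1].split("..")
    return int(a), int(b)


def toy_split(task: str) -> List[str]:
    a, b = _bounds(task)
    if b - a < 2:
        return []  # already small: no further breakdown
    mid = (a + b) // 2
    return [f"sum {a}..{mid}", f"sum {mid + 1}..{b}"]


def toy_answer(task: str) -> str:
    a, b = _bounds(task)
    return str(sum(range(a, b + 1)))


def toy_combine(task: str, partials: List[str]) -> str:
    return str(sum(int(p) for p in partials))


print(solve("sum 1..100", toy_split, toy_answer, toy_combine))  # 5050
```

The depth cap is the main design choice here: without it, a model that keeps finding ways to split a task could recurse forever.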

Right now, all LLMs just do a single pass and spit out the output. They don’t reason with themselves like we do, at least not yet. It’s kinda like quiz competitions, where we immediately shout “blue” when asked “what color is the sky?” When we’re asked something more complicated, though, we don’t just answer quickly on intuition, do we? We pause, think, rethink, look for counterarguments, patch holes in our reasoning, and so on.

That’s the ability LLMs lack right now, but very soon they’ll have it, and that opens up a crazy number of possibilities. Take code, for example. Right now, LLMs just spit out code without checking whether it works. Soon they’ll be able to run it themselves, look for errors and bugs, fix them, and only then submit the final code.
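
Here’s a minimal sketch of that run, check, fix loop, assuming a hypothetical `model(task, feedback)` function that returns source code and can take the previous error message as feedback. The fake model below returns broken code on the first try and fixed code once it sees an error, so the loop runs offline. Real systems would sandbox the execution; plain `exec` here has no isolation at all.

```python
import traceback
from typing import Callable, Optional, Tuple


def try_run(code: str) -> Optional[str]:
    """Execute code in a fresh namespace; return the traceback text, or None if it ran cleanly."""
    try:
        exec(code, {})  # WARNING: no sandboxing, illustration only
    except Exception:
        return traceback.format_exc()
    return None


def generate_and_repair(task: str,
                        model: Callable[[str, Optional[str]], str],
                        max_attempts: int = 3) -> Tuple[str, int]:
    """Ask for code, run it, and feed any error back until it runs or attempts run out."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        code = model(task, feedback)
        error = try_run(code)
        if error is None:
            return code, attempt  # code ran without raising
        feedback = error  # show the model its own error on the next try
    raise RuntimeError(f"no working code after {max_attempts} attempts")


def fake_model(task: str, feedback: Optional[str]) -> str:
    # Hypothetical stand-in for an LLM call.
    if feedback is None:
        return "total = sum(range(10)\n"  # first draft: missing parenthesis
    return "total = sum(range(10))\nassert total == 45\n"


code, attempts = generate_and_repair("add up 0 through 9", fake_model)
print(attempts)  # 2
```

“Ran without raising” is a weak definition of working, which is why the second draft carries its own `assert`: a wrong answer then counts as a failure too and gets fed back like any other error.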

Stuff like this would supercharge them. Now, I know I’m going to be downvoted to hell for talking about AI, cuz Lemmy hates it. Mark my words, though: you’ll be able to do A LOT with LLMs because of this.

AI is going to exist and improve like crazy no matter what. The leftist position on this should be public ownership over these models and NOT pretending that they don’t exist.