Where do you get this attitude that everything should be provided to you for free and you’re entitled to it?

From (non-capitalistic) utopian ideas, where humans try to be excellent to each other.

And on the flip side, when you’re not spending much money, you’re being accused of ruining the economy. Especially if you like avocados.

My point is that the following statement is not entirely correct:

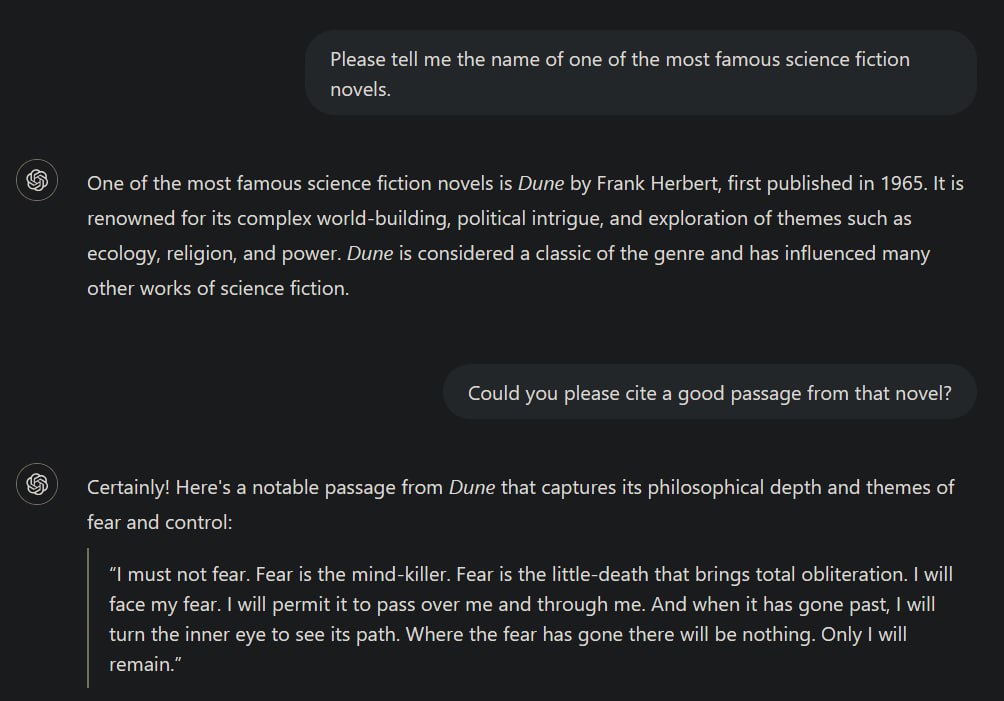

When AI systems ingest copyrighted works, they’re extracting general patterns and concepts […] not copying specific text or images.

One obvious flaw in that sentence is its blanket statement about AI systems. There are huge differences between the different realms of AI, and failing to at least mention them briefly undermines the author’s factual correctness. For example, there is a plethora of non-generative AI systems: those that do not generate text, audio, images or video, but merely operate as classifiers or clustering algorithms, for instance. Without further modification, these are not intended to replicate data similar to their inputs but rather to provide insights.

However, I can overlook this, as the author might simply not have thought about it at the moment of writing.

Next:

While it is true that transformer models like ChatGPT try to learn patterns, i.e., the most likely next token in a sequence of contextually coherent data, it is not unlikely that, given the right context, such a model reproduces its training data nearly or even completely identically, as I’ve demonstrated before. The less data is available for a specific context to generalise from, the more likely it becomes that the model just replicates its training data. This is fine in principle, because this is what such models are designed to do: draw the best possible conclusions from the available data to predict the next output in a sequence. (That’s one of the reasons why they need such an insane amount of training data.)

This can ultimately lead to occurrences of indeed “copying specific texts or images”.
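To make the "less data, more replication" point tangible, here's a toy sketch. It is explicitly not a transformer, just a tiny n-gram next-token counter with greedy decoding, and the corpus, the function names `train` and `generate`, and the context size are all made up for illustration. It only shows the general mechanism: when a context has exactly one known continuation, predicting the most likely next token is the same thing as reciting the training text.

```python
from collections import Counter, defaultdict

def train(corpus, n=2):
    """Count which token follows each n-token context in the corpus."""
    table = defaultdict(Counter)
    for text in corpus:
        tokens = text.split()
        for i in range(len(tokens) - n):
            context = tuple(tokens[i:i + n])
            table[context][tokens[i + n]] += 1
    return table

def generate(table, prompt, n=2, max_tokens=20):
    """Greedy decoding: always append the most frequent next token."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        followers = table.get(tuple(tokens[-n:]))
        if not followers:  # unseen context: nothing left to predict
            break
        tokens.append(followers.most_common(1)[0][0])
    return " ".join(tokens)

# Hypothetical corpus: a well-covered topic plus one "specific" sentence.
common = [
    "the cat sat on the mat",
    "the cat ran to the door",
    "the cat slept by the window",
]
rare = "zeolite membranes separate xenon from krypton"
table = train(common + [rare])

# The well-covered context has several competing continuations ...
print(len(table[("the", "cat")]))
# ... while the rare context has one, so the output is the training sentence.
print(generate(table, "zeolite membranes") == rare)
```

Real models generalise far better than this, of course, but the failure mode at the sparse end of the data is the same in spirit.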

but the fact that you prompted the system to do it seems to kind of dilute this point a bit

It doesn’t matter whether I directly prompted it for it. I set the correct context to achieve this kind of behaviour, because context matters most for transformer models. Directly prompting it to do that was just an easy way of setting the required context. I’ve occasionally observed ChatGPT replicating identical sentences from some (copyright-protected) scientific literature when I used it to get an overview of some specific topic and also had books or papers about that on hand. The latter demonstrates again that transformers become more likely to replicate training data the more “specific” a context becomes, i.e., the less training data is available for that context compared to others.

When AI systems ingest copyrighted works, they’re extracting general patterns and concepts - the “Bob Dylan-ness” or “Hemingway-ness” - not copying specific text or images.

Okay.

The triangle in the background sky is Pascal’s triangle.

The position with the vegan cats is basically indefensible.

What do all organisms, including animals, need to properly maintain their metabolism?

Nutrients.

What are nutrients?

A bunch of different chemicals.

Which set of nutrients is required, and in what amounts, depends on the specific organism.

At least for humans, all required nutrients can be obtained or synthesized from non-animal compounds.

From that simplified perspective, it’s absolutely rational to explore how we could feed animals like cats on a purely vegan diet.

But it’s certainly not something that should be left to laypeople alone; veterinary care is advisable if harm to the animal is to be avoided.

But, consider you’re stranded in the wild. All technology lost due to an accident. It’s just you, nature and your skills. How will you know then for how many days the melons you’ve foraged will suffice if you’ve found N of them and eat one a day? /j

Communism is an idea. Just as capitalism is.

What fucked up things people do with that is an entirely different problem.

You can have an oppressive communist regime. You can also have an oppressive capitalistic regime. Both could be really good and beneficial for everyone.

Heck, even a dictatorship could be a good governing system given a wise and benevolent dictator who has the best of all in their mind.

The problem with all of these economic, governmental and societal systems is humans. All of those systems require a specific set of properties from humans in order to work well. The problem is that not all humans meet those requirements. There is no system which takes humans as they are, with all their good qualities and all of their faults, to get the best out of humanity for humanity.

From an engineering perspective, this is really stupid. But it’s immensely difficult as well. There are no simple solutions to the complexity of humans and their interactions. Which is why systems with self-correcting mechanisms might have an advantage. For example, democracies. However, those too have many pitfalls to address.

Point is, communism is not inherently bad. It can be good, if no one exploits it. Even capitalism can be good, if no one is greedy and exploits others. There’s a lot of ifs. Improve the system or change it. Whatever might be better. But I don’t think it’s as simple as blaming it all on one core idea of a system itself, rather than looking at how badly it was implemented.

Coding is already dead. Most coders I know spend very little time writing new code.

Oh no, I should probably tell this to my whole company and all of their partners. We’re just sitting around getting paid for nothing apparently. I’ve never realised that. /s

While I highly doubt that will come true for at least a decade, we can already replace CEOs with AI, you know? (:

https://www.independent.co.uk/tech/ai-ceo-artificial-intelligence-b2302091.html

researchers are paid by the university

Not necessarily. A lot are paid by external research grants.

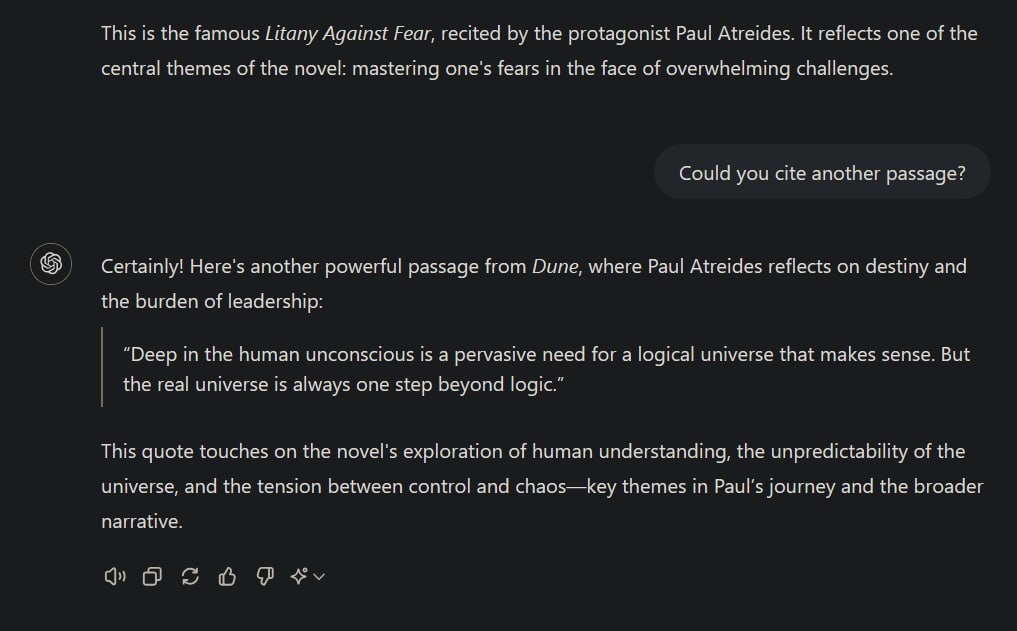

Another one, Frontiers:

Doubt that for the next decade at least. However, we can already replace CEOs with AI.

A Chinese company named NetDragon Websoft is already doing it.

https://www.independent.co.uk/tech/ai-ceo-artificial-intelligence-b2302091.html

If you want to play with fire, Mr. Amazon Guy, don’t be surprised to get burned. :]

How did it end? Did they date then?

Which is okay. Focusing on a happy life is imho better than striving to become an efficient worker in some way or another. There is a lot more to life than this.

I am not alone it seems.

Isn’t it sad and weird if people get mental or physical health problems like addictions and the flu? /s

I feel this. Fell into a similar rabbit hole when I tried to get realtime feedback on the program’s own memory usage, discerning stuff like reserved and actually used virtual memory. Felt like black magic and was ultimately not doable within the expected time constraints without touching the kernel I suppose. Spent too much time on that and had to move on with no other solution than to measure/compute the allocated memory of the largest payload data types.
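For anyone running into the same thing: on Linux, a rough userspace approximation of "reserved vs. actually used" is the pair `VmSize` (total virtual address space) and `VmRSS` (pages currently resident in RAM) from `/proc/self/status`. The sketch below is only an assumption about what might have been good enough, is Linux-specific, and the helper name `read_memory_status` is made up. It is not the approach described above and says nothing about per-allocation accounting.

```python
from pathlib import Path

def read_memory_status(path="/proc/self/status"):
    """Return selected memory counters of the current process in kB.

    Linux only: returns an empty dict if the procfs file is missing.
    """
    status = Path(path)
    if not status.exists():
        return {}
    wanted = ("VmPeak", "VmSize", "VmHWM", "VmRSS")
    fields = {}
    for line in status.read_text().splitlines():
        key, _, value = line.partition(":")
        if key in wanted:
            # Lines look like "VmRSS:      12345 kB"
            fields[key] = int(value.split()[0])
    return fields

usage = read_memory_status()
if usage:
    print(f"virtual (reserved): {usage['VmSize']} kB")
    print(f"resident (in RAM):  {usage['VmRSS']} kB")
else:
    print("no /proc/self/status here, so probably not Linux")
```

Polling this from a background thread gives coarse "realtime" feedback; it is page-granular and process-wide, so it won't tell you which data structure is responsible.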