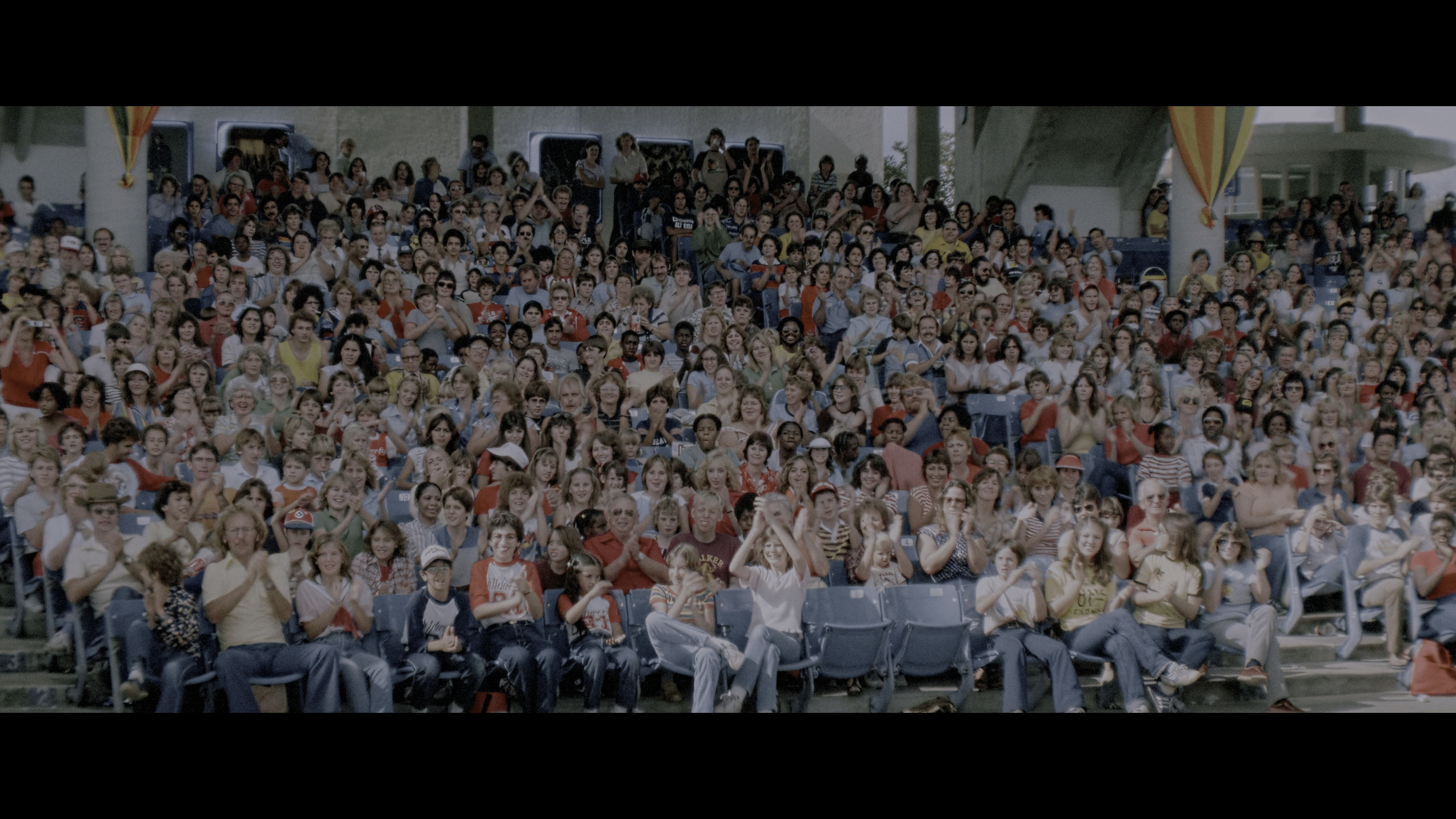

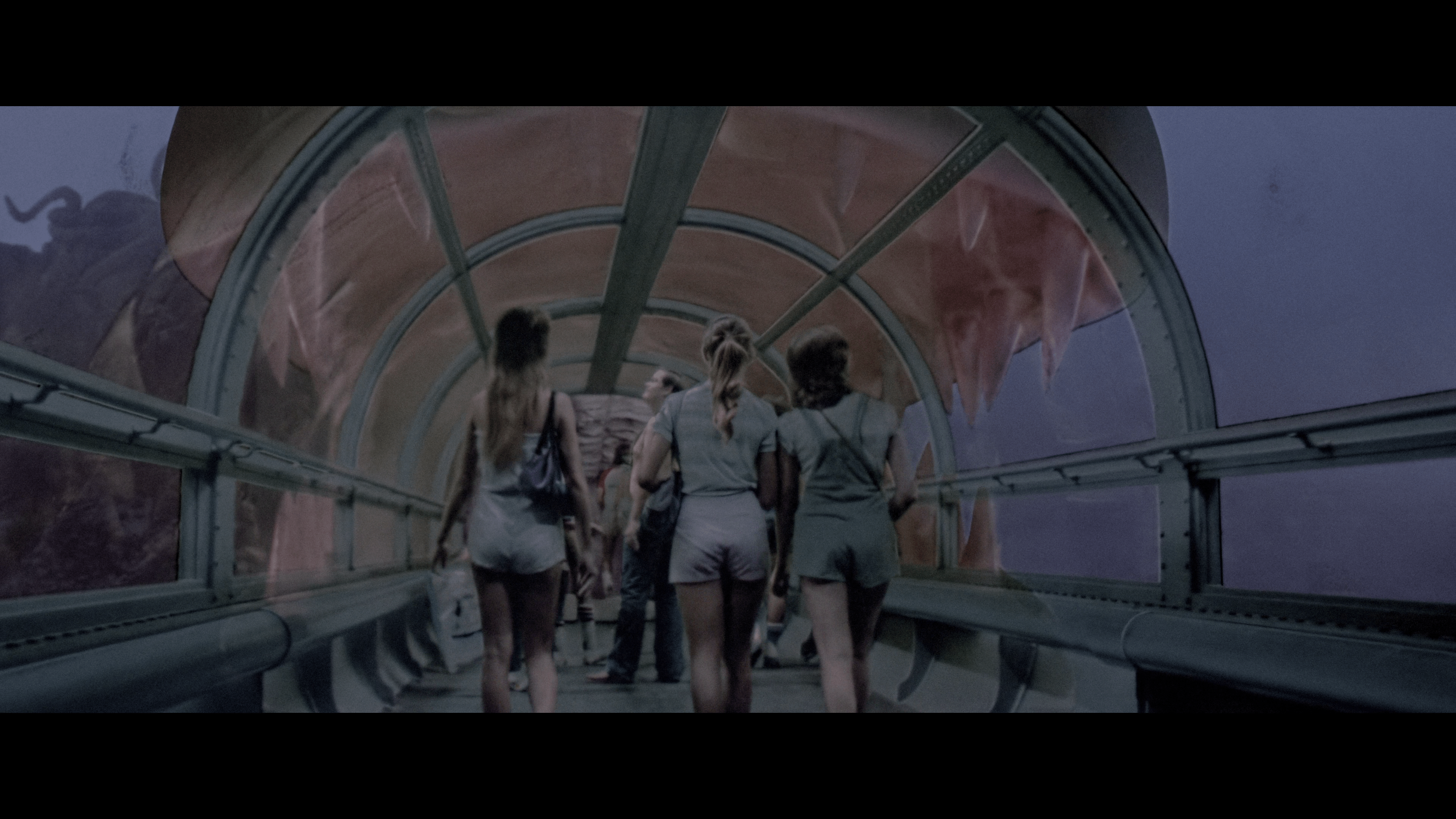

Jaws 3 might be the worst 4K ever released as of this very moment. It’s that bad, horribly butchered by AI and awful DNR and color timing. The 2D version always looked a bit off because the film was shot specifically for 3D, but this abysmal 4K transfer blows its limitations and issues up until they become glaringly obvious. Then on top of that you have AI interpretation the likes of which not even God has seen.

The film legitimately looks like it was created with Midjourney on more than one occasion. Remember the washed-out SNL bumpers from the ’70s and ’80s that used to show the host for the night? Entire frames, even entire sequences of the film, look like that. No film should look like that in 4K. People look like paper cutouts in more than one frame. This is abhorrent.

I said this in another comment thread but will post here too:

The bigger question is why is the upscale suddenly so much worse than it was before?

Plenty of films finished at 2K got 4K UHD discs that were nothing more than upscales with HDR grades applied, but they were never this bad. It’s as if AI upscaling became a thing and the studios tossed out whatever methods they used before, which seemingly worked JUST FINE, in favour of new technology with GLARING flaws like this.

If I’m not mistaken, they’ve been using AI upscaling in remasters for 10 or so years now, so it’s not like this is something new. If it’s honestly that bad, then I’m guessing they tried to upscale with “generative” AI like Stable Diffusion instead of something hyper-focused on upscaling like waifu2x.

Upscaling has always had questionable results, but there are some serious uses. Drawings, for example, upscale well when they contain no more information than the pixels already capture. Consider a low-res flag of Japan, say 24x16. If you know there are no strokes smaller than about 10 pixels, the only shape that matches the pixel values is a circle, so you can redraw it successfully at any resolution. That’s the idea behind the Whittaker-Nyquist-Kotelnikov-Shannon sampling theorem: a signal with no detail finer than the sampling can resolve can be reconstructed exactly. Waifu2x will do well here too, reinterpreting the image with little distortion.
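To make the flag example concrete, here is a minimal Python sketch (stdlib only; every function name is hypothetical). It assumes the low-res image is stored as per-pixel "red coverage" between 0 (white) and 1 (red), and that we already know the picture is a single red disc. Strictly speaking that is a strong prior rather than the sampling theorem itself, but it shows why a simple enough image can be redrawn exactly: the red area gives the radius and its centroid gives the center.

```python
import math

def rasterize_disc(width, height, cx, cy, r, samples=8):
    """Render a red disc on white as per-pixel red coverage in [0, 1].

    Coverage is estimated by supersampling each pixel on a samples x samples grid.
    Pixel (x, y) spans [x, x+1) x [y, y+1) in pixel units.
    """
    img = []
    for y in range(height):
        row = []
        for x in range(width):
            hits = 0
            for sy in range(samples):
                for sx in range(samples):
                    px = x + (sx + 0.5) / samples
                    py = y + (sy + 0.5) / samples
                    if (px - cx) ** 2 + (py - cy) ** 2 <= r * r:
                        hits += 1
            row.append(hits / (samples * samples))
        img.append(row)
    return img

def fit_disc(img):
    """Recover (cx, cy, r), ASSUMING the image contains exactly one disc."""
    area = sum(sum(row) for row in img)  # total red coverage = disc area in pixels
    cx = sum(v * (x + 0.5) for row in img for x, v in enumerate(row)) / area
    cy = sum(v * (y + 0.5) for y, row in enumerate(img) for v in row) / area
    r = math.sqrt(area / math.pi)
    return cx, cy, r

def upscale_disc(img, factor, samples=4):
    """Redraw the fitted disc at `factor` times the resolution instead of interpolating pixels."""
    cx, cy, r = fit_disc(img)
    h, w = len(img), len(img[0])
    return rasterize_disc(w * factor, h * factor, cx * factor, cy * factor, r * factor, samples)

# A 24x16 flag; the disc's diameter is 3/5 of the flag's height.
low = rasterize_disc(24, 16, 12.0, 8.0, 4.8)
print(fit_disc(low))
big = upscale_disc(low, 10)  # 240x160, with a clean edge and no blockiness
```

The design choice is the whole point: rather than guessing new pixels from neighboring pixels, it fits the one model that explains the small image and re-renders that model. This only works because the model is known in advance, which is exactly what photos and film lack.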

With photos and video, it’s a lot trickier. Things that appear noisy can be smoothed out, or the noise can be sharpened and mistaken for real signal. Same with blur. Legitimate techniques such as averaging static areas across consecutive frames can bring noise down, but only up to a point. You can’t know how to draw a 4x4 square of 16 pixels if you only know 4 of them, and there is no limit on how sharp the original image could have been. It’s always been guesswork, but AI is much better at picking up context clues than naïve sharpening or denoising.
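Both points in that paragraph can be checked with a small Python sketch (stdlib only; all names are hypothetical, and the 2x box filter is just one assumed way of shrinking an image). The first part shows why upscaling is guesswork: a flat gray block and a fine checkerboard both shrink to the same 2x2 block, so the small image alone can’t tell you which one to draw back. The second part simulates averaging a static area across frames. With independent noise the error falls roughly as 1/√N, so halving the noise takes four times as many frames, which is one reason it only helps up to a point (the other being that real scenes move).

```python
import random
import statistics

def box_downsample(block):
    """Halve a square block by averaging each 2x2 tile (a plain box filter)."""
    n = len(block)
    return [
        [(block[y][x] + block[y][x + 1] + block[y + 1][x] + block[y + 1][x + 1]) / 4
         for x in range(0, n, 2)]
        for y in range(0, n, 2)
    ]

def temporal_average(frames):
    """Average co-located pixels across frames; only valid where the scene is static."""
    count = len(frames)
    return [sum(frame[i] for frame in frames) / count for i in range(len(frames[0]))]

def noisy_frames(signal, sigma, count, rng):
    """Simulate `count` captures of a static signal with independent Gaussian noise."""
    return [[s + rng.gauss(0, sigma) for s in signal] for _ in range(count)]

def residual_noise(signal, estimate):
    """Standard deviation of the error between an estimate and the true signal."""
    return statistics.pstdev([e - s for e, s in zip(estimate, signal)])

# 1) Two very different 4x4 blocks (16 pixels) that shrink to the same 2x2 block (4 pixels).
flat = [[128] * 4 for _ in range(4)]
checker = [[255 if (x + y) % 2 == 0 else 1 for x in range(4)] for y in range(4)]
print(box_downsample(flat) == box_downsample(checker))  # True

# 2) Averaging more frames of a static scene cuts noise, with diminishing returns.
rng = random.Random(0)
signal = [100.0] * 10_000
for n in (1, 4, 16, 64):
    est = temporal_average(noisy_frames(signal, 10.0, n, rng))
    print(n, round(residual_noise(signal, est), 2))
```

The noise figures printed in the second part should roughly halve each time the frame count quadruples.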